Kevin Russell and I had the opportunity to interview Dr. Ido Bachelet of the Bar-Ilan Institute of Nanotechnology and Advanced Materials. Dr. Bachelet and his team are developing a new kind of cancer drug delivery system that has the potential to eradicate cancerous tissue from the body without damaging healthy cells.

Before I begin, however, it’s important to understand that none of the technologies we are going to discuss is science fiction; all of them are science reality.

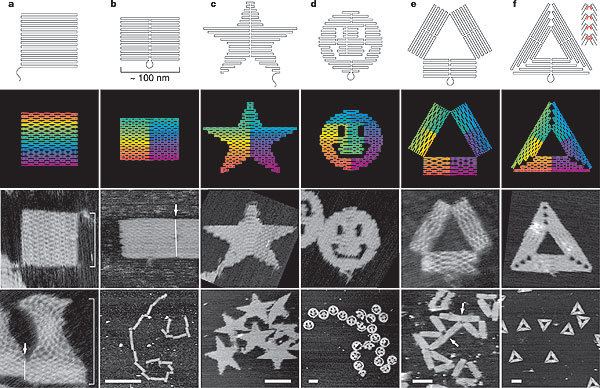

DNA origami is a technique that allows scientists to use DNA molecules as programmable building blocks, relying on the molecular recognition between complementary DNA strands to assemble designed structures. Starting from a single strand of DNA, scientists are able to manipulate its sequence, telling the DNA to self-assemble into predetermined shapes. To do this, scientists use software that is similar to CAD. It programs the DNA and tells it to fold back and forth into a desired shape or pattern.

Almost seven years after Paul Rothemund developed the original DNA origami technique at the California Institute of Technology, Dr. Ido Bachelet and his team evolved the concept into a radical new drug delivery system. In Dr. Bachelet’s recent publication, ‘Designing a bio-responsive robot from DNA origami’, his team used the genome of a virus as the primary building block and created a cage-like scaffold capable of housing life-promoting drugs such as antibiotics and chemotherapy medicines.

However, these nanorobots not only have the ability to house powerful medicines; they can also deliver the drugs to the precise location that requires healing.

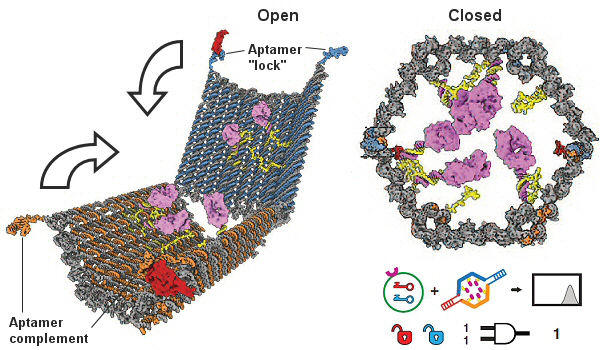

The current nanorobots are free-floating: they travel through the bloodstream by the billions and remain neutral until they encounter a location that requires assistance. The nanorobots recognize the proper location through molecular cues they are programmed to respond to, which switch them from their closed, neutral state to their open state (see image 2). These molecular cues act as the key that switches the neutral nanorobot into combat-ready mode and tells it to treat the site, delivering the drugs directly to the cancerous spot or site of infection.

Currently, one of the primary problems with chemotherapy is that the drugs injected into the patient kill not only the rogue cancerous cells but healthy cells as well. By taking a sample of the cancerous cells, or by knowing the specific molecular markers of the rogue cells, scientists are able to program the nanorobots to attack only the enemy cells with a specific payload.
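For readers who think in code, the targeting idea above can be pictured with a toy model. This is only an illustrative sketch, not Dr. Bachelet’s actual design: the marker names are made up, and the rule that every programmed cue must be present before the robot opens is my own simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nanorobot:
    """Toy model of a cue-gated nanorobot (purely illustrative)."""

    # Hypothetical marker names the robot is "programmed" to recognize.
    programmed_cues: frozenset

    def is_open(self, cell_markers):
        # Assumption: the robot opens only if ALL programmed cues
        # are found on the cell it has encountered.
        return self.programmed_cues <= set(cell_markers)


# Hypothetical example cells, described by the markers on their surface.
robot = Nanorobot(frozenset({"marker_A", "marker_B"}))
cancer_cell = {"marker_A", "marker_B", "marker_C"}
healthy_cell = {"marker_A", "marker_C"}

print(robot.is_open(cancer_cell))   # True: both cues are present
print(robot.is_open(healthy_cell))  # False: marker_B is missing
```

In this picture, a healthy cell that happens to share one marker with the tumor still leaves the robot closed, which is the whole point of programming it against a specific set of cues.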

The idea is that the nanorobots don’t excrete or release the drug. Instead, they make the drug accessible or inaccessible, in effect turning it on and off. Because the drug is linked to the robot, one could think of it as a sword and its wielder. As the nanobot prepares to attack the cell it was programmed to destroy, it draws its sword (the drug), which attacks the cell, and then sheathes the drug again, leaving all of the healthy cells around the infection site unaffected by the potent chemotherapy drugs. One could also think of this technology as Predator drones that can home in on and wipe out enemy insurgents while leaving the healthy citizen population unaffected by the combat.

I’m sure some of you are asking, ‘What happens when these nanorobots have achieved their objective? I don’t want millions, maybe even billions, of nanorobots loaded with powerful chemo drugs floating in my body.’ The nanorobots have a half-life of an hour or two, but scientists can modify them to last up to 3 days before they start to disintegrate, a process carried out by enzymes. These enzymes slowly start to form segregates about half a micron in size (roughly the size of bacteria). As they slowly dismantle the nanobot, the payload is gradually released into the body at non-lethal doses until the enzymes have completed their task of disassembling, leaving the body free of the cancer and of any nanorobots.
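To get a feel for what a half-life of an hour or two means, here is a back-of-the-envelope sketch. It assumes simple exponential decay, which is my simplification rather than something stated in the interview, and the function name is made up for illustration.

```python
def fraction_remaining(hours_elapsed, half_life_hours):
    """Fraction of nanorobots still intact, assuming simple exponential decay."""
    return 0.5 ** (hours_elapsed / half_life_hours)


# With a 2-hour half-life, three half-lives pass in 6 hours.
print(fraction_remaining(6, 2))  # 0.125
# With a 1-hour half-life, six half-lives pass in 6 hours.
print(fraction_remaining(6, 1))  # 0.015625
```

Under that assumption, only about an eighth of the unmodified nanorobots would still be intact after six hours with a two-hour half-life, and under 2% with a one-hour half-life.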

FUTURE IMPLICATIONS

The current model of nanobot is extremely efficient at disengaging certain types of cells or delivering payloads to specific sites in the body. However, for diseases such as Alzheimer’s disease or Parkinson’s disease, where the body suffers a death on the molecular level, these nanobots are ineffective. In the future, it is possible that we will see an all-in-one nanorobot package. These nanobots would be able not only to destroy cells but also to promote the rejuvenation of cells without increasing the likelihood of tumors or cancers.

Another capability that we will see in coming nanorobot versions is the ability to direct or steer nanoparticles to the precise location that requires treatment. In effect, this would create a new kind of surgeon: the Nanorobot Surgeon. These doctors would be able to cut, stitch, and sample cells without ever having to perform what we consider modern-day surgery. Dr. Bachelet and his team have already connected these nanorobots to an Xbox controller, which acts as the conductor of a symphony of nanorobots working in unison to eradicate cancerous cells. The systems for controlling these nanorobots will grow in complexity and sophistication, completely changing the face of healthcare around the world.